Introduction

In the world of data and task automation, managing workflows efficiently is crucial. This is where Apache Airflow comes into play. Imagine having a tool that can help you automate and schedule tasks, coordinate data flows, and handle complex workflows seamlessly. This is exactly what Airflow does, making it an essential tool for modern data engineers and developers. In this article, we’ll take a beginner-friendly journey into the world of Airflow and explore its core concepts.

What is Apache Airflow?

Apache Airflow is an open-source platform for programmatically authoring, scheduling, and monitoring workflows. You define a workflow, often called a data pipeline, as a sequence of tasks, and Airflow ensures that those tasks run in a specific order and under defined conditions.

History of Apache Airflow

The story of Apache Airflow begins around 2014 when engineers at Airbnb faced a common challenge—managing complex data workflows. The existing tools fell short of their needs, prompting them to create their own solution. The result? A system that allowed them to schedule, monitor, and manage their workflows effectively. This tool was the foundation of what would later become Apache Airflow.

As the tool proved its worth, Airbnb recognized its potential for a broader audience. In 2015, they open-sourced it, allowing developers worldwide to benefit from the power of workflow automation. The tool quickly gained traction in the tech community, attracting contributors and enthusiasts.

In 2016, Airflow entered the Apache Incubator, the Apache Software Foundation’s program for nurturing open-source projects, solidifying its status as a promising project.

In 2019, after demonstrating maturity and a strong community, Apache Airflow graduated from the Apache Incubator, becoming a top-level project within the Apache Software Foundation. This graduation marked a significant milestone, highlighting Airflow’s reliability and its place among respected open-source projects.

As years passed, Apache Airflow continued to evolve. It embraced the cloud era by integrating seamlessly with cloud services like AWS, Google Cloud Platform, and Azure. This opened up new possibilities for orchestrating workflows in cloud environments, giving data engineers greater flexibility and scalability.

When to Use Airflow

Scenario 1: Repetitive Tasks Rule Your Day

Imagine you’re a data analyst handling daily reports that involve extracting data, running scripts, and sending emails. If this routine sounds familiar, Apache Airflow can be your ally. Airflow excels at automating repetitive tasks, letting you set up schedules to run them automatically. You define the tasks, set the execution times, and let Airflow handle the rest. This way, you can bid farewell to the monotony and focus on more exciting endeavors.

Scenario 2: Complex Workflows Need Taming

Picture a puzzle with numerous pieces that need to fit together perfectly. When you have a series of interdependent tasks, like a complex data processing pipeline or an ETL (Extract, Transform, Load) workflow, Airflow comes to the rescue. It lets you define tasks and their dependencies in code as Directed Acyclic Graphs (DAGs), which the web interface then displays as a graph. With Airflow, you ensure that tasks follow a logical sequence and dependencies are met, preventing chaos in your workflow.

Scenario 3: Monitoring Matters

Imagine you’re overseeing a project with multiple contributors, each responsible for specific tasks. Tracking their progress and ensuring timely execution can be overwhelming. Enter Apache Airflow’s monitoring capabilities. It logs every task’s status, execution time, and any failures. This built-in monitoring and alerting system keeps you informed about how well your tasks are performing, enabling you to take corrective action promptly.

Scenario 4: Scalability and Parallelism Are Key

Envision a scenario where the volume of tasks increases significantly. Traditional methods might struggle to cope, leading to bottlenecks and delays. With Airflow, you can breathe easy. It offers parallel execution and allows you to scale your workflow by distributing tasks across multiple workers. This means your tasks can run simultaneously, saving time and ensuring efficient resource utilization.

Scenario 5: Diverse Integrations Await

Consider a world where your tasks involve interacting with various services and tools like databases, APIs, cloud platforms, and more. Apache Airflow is your gateway to seamless integration. It provides a range of ready-to-use operators that connect with popular services. This means you can trigger tasks, pull data, or push outputs across different systems—all within your orchestrated workflow.

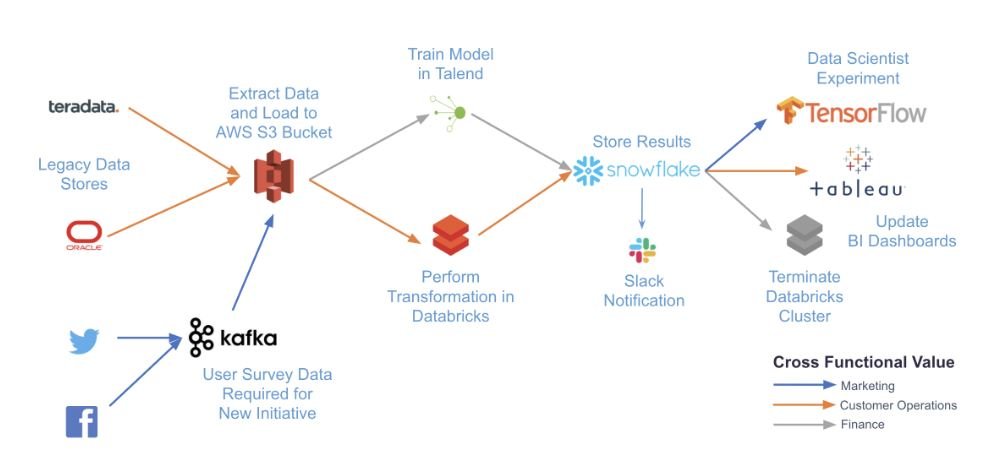

The following diagram illustrates a complex use case that can be accomplished with Airflow:

Key Concepts

Directed Acyclic Graphs (DAGs): At the heart of Airflow are Directed Acyclic Graphs (DAGs). A DAG models your workflow as a collection of tasks connected by arrows that indicate the order in which they should run. Importantly, a DAG contains no loops: tasks flow in one direction, so no task can end up depending on itself.
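To make the "no loops" rule concrete, here is a minimal sketch that uses Python’s standard `graphlib` module (Python 3.9 or later) rather than Airflow itself. The task names are hypothetical, and the dictionary layout is only an illustration of the idea, not how Airflow stores a DAG.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical ETL workflow: each key maps a task to the tasks it depends on.
workflow = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "send_report": {"load"},
}

# A valid DAG always has at least one order that respects every arrow.
order = list(TopologicalSorter(workflow).static_order())
print(order)

# Pointing the first task back at the last one creates a loop, so the
# graph is no longer acyclic and no valid run order exists.
looped = dict(workflow, extract={"send_report"})
try:
    list(TopologicalSorter(looped).static_order())
    has_cycle = False
except CycleError:
    has_cycle = True
print("cycle detected:", has_cycle)
```

The first graph yields the order extract, transform, load, send_report. The second is rejected, which is the same reason a workflow tool cannot accept circular dependencies.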

Tasks: Tasks are individual units of work within a workflow. A task can be anything from running a Python script or executing a SQL query to sending an email. Each task is defined with specific properties, like the code to run and the tasks it depends on.

Operators: Operators define how a task will be executed. There are different types of operators tailored to various tasks. For instance, a BashOperator is used to run a bash command, a PythonOperator executes a Python function, and a Sensor waits for a certain condition before proceeding.
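The relationship between operators and tasks can be sketched with a few plain Python classes. These `Toy...` classes are hypothetical stand-ins written for this article, not Airflow’s real operators, and the `poke_interval` and `max_pokes` parameters are assumptions chosen to keep the example short.

```python
import time


class ToyOperator:
    """Base class: an operator is a reusable template for one kind of task."""

    def __init__(self, task_id):
        self.task_id = task_id

    def execute(self):
        raise NotImplementedError


class ToyPythonOperator(ToyOperator):
    """Runs a Python function when the task executes."""

    def __init__(self, task_id, python_callable):
        super().__init__(task_id)
        self.python_callable = python_callable

    def execute(self):
        return self.python_callable()


class ToySensor(ToyOperator):
    """Checks a condition repeatedly and succeeds once it becomes true."""

    def __init__(self, task_id, condition, poke_interval=0.01, max_pokes=100):
        super().__init__(task_id)
        self.condition = condition
        self.poke_interval = poke_interval  # seconds to wait between checks
        self.max_pokes = max_pokes          # give up after this many checks

    def execute(self):
        for _ in range(self.max_pokes):
            if self.condition():
                return True
            time.sleep(self.poke_interval)
        raise TimeoutError(f"{self.task_id} gave up waiting")


checks = []


def file_has_arrived():
    # Hypothetical condition that becomes true on the third check.
    checks.append(1)
    return len(checks) >= 3


wait = ToySensor("wait_for_file", file_has_arrived)
greet = ToyPythonOperator("greet", lambda: "hello from a task")

arrived = wait.execute()
message = greet.execute()
print(arrived, len(checks), message)
```

Each instance, such as `wait` or `greet`, plays the role of a task, while the class it was created from plays the role of the operator.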

Dependencies: Airflow allows you to set dependencies between tasks. This means you can specify that Task B should run only if Task A has successfully completed. This dependency structure helps orchestrate the order in which tasks are executed.
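The "run Task B only if Task A succeeded" rule can be sketched in plain Python as follows. The `run_workflow` helper and the task names are hypothetical. The state names `success`, `failed`, and `upstream_failed` mirror states that Airflow reports, but the logic here is a simplification and not Airflow’s implementation.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, upstream):
    """Run callables in dependency order and return a state per task.

    tasks: name -> zero-argument callable (hypothetical task bodies)
    upstream: name -> set of task names that must succeed first
    """
    states = {}
    for name in TopologicalSorter(upstream).static_order():
        # Do not run a task unless every task it depends on succeeded.
        if any(states[dep] != "success" for dep in upstream.get(name, ())):
            states[name] = "upstream_failed"
            continue
        try:
            tasks[name]()
            states[name] = "success"
        except Exception:
            states[name] = "failed"
    return states


def fail():
    raise RuntimeError("simulated failure")


tasks = {"task_a": fail, "task_b": lambda: None, "task_c": lambda: None}
upstream = {"task_a": set(), "task_b": {"task_a"}, "task_c": set()}

states = run_workflow(tasks, upstream)
print(states)
```

Here `task_b` never runs because `task_a` failed, while the unrelated `task_c` still completes.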

Scheduler: The Airflow scheduler is responsible for determining when each DAG, and each task within it, will run. It reads the schedule defined for each DAG, starts runs when they come due, and makes sure tasks are executed as expected.
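At its simplest, a schedule is a start time plus an interval. The sketch below lists the run times that have come due by a given moment. The `due_runs` function is a hypothetical helper, and it deliberately ignores features a real scheduler has to handle, such as cron expressions and time zones.

```python
from datetime import datetime, timedelta


def due_runs(start, interval, now):
    """Return every scheduled time from start up to and including now."""
    runs = []
    current = start
    while current <= now:
        runs.append(current)
        current += interval
    return runs


# Hypothetical daily schedule that starts at 06:00 on 1 January 2024.
start = datetime(2024, 1, 1, 6, 0)
now = datetime(2024, 1, 4, 12, 0)

runs = due_runs(start, timedelta(days=1), now)
for run_time in runs:
    print(run_time.isoformat())
```

With these values, four runs are due: one at 06:00 on each day from 1 to 4 January.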

How Airflow Works

DAG Definition: You start by defining your workflow as a Python script. This script creates a DAG object, defines tasks using operators, and sets up task dependencies.
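Airflow DAG scripts commonly chain tasks with the `>>` operator, as in `extract >> transform`. The sketch below shows how that syntax can work in plain Python. The `Task` class is a toy written for this article, not Airflow’s own class, and its attributes are assumptions.

```python
class Task:
    """Toy stand-in for a task in a workflow script (not the real Airflow class)."""

    def __init__(self, task_id, run):
        self.task_id = task_id
        self.run = run          # callable holding the work of the task
        self.upstream = set()   # ids of tasks that must finish first

    def __rshift__(self, other):
        # `a >> b` records that b depends on a, then returns b
        # so that chains such as a >> b >> c read left to right.
        other.upstream.add(self.task_id)
        return other


extract = Task("extract", lambda: "raw rows")
transform = Task("transform", lambda: "clean rows")
load = Task("load", lambda: "rows loaded")

# Declare the order: extract first, then transform, then load.
extract >> transform >> load

for task in (extract, transform, load):
    print(task.task_id, "depends on", sorted(task.upstream))
```

Because the dependencies are ordinary Python objects, a workflow script can build them with loops, functions, and configuration values like any other program.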

Scheduler: The scheduler kicks in and decides when to execute tasks based on their dependencies and schedules. When a DAG’s schedule comes due, it starts a run and queues each task as soon as the tasks it depends on have finished.

Execution: When a task is ready to run, the scheduler hands it to the executor configured for your Airflow installation. The executor is responsible for running the task’s code, either on the same machine or on separate workers.
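Running independent tasks at the same time can be sketched with the standard library. In this example a thread pool stands in for a set of workers, and `graphlib` reports which tasks are ready. The task names and the `run_task` function are hypothetical, and running tasks wave by wave is a simplification of what a real executor does.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter

# Hypothetical workflow: two extracts can run side by side, then a join.
upstream = {
    "extract_orders": set(),
    "extract_users": set(),
    "join": {"extract_orders", "extract_users"},
    "report": {"join"},
}


def run_task(name):
    # Placeholder for real work such as a query or a script.
    return f"{name} done"


sorter = TopologicalSorter(upstream)
sorter.prepare()

waves = []
with ThreadPoolExecutor(max_workers=2) as pool:  # stands in for the workers
    while sorter.is_active():
        # Tasks whose upstream tasks have all finished.
        ready = sorted(sorter.get_ready())
        # Run every ready task in parallel and wait for the results.
        results = list(pool.map(run_task, ready))
        sorter.done(*ready)
        waves.append(ready)

print(waves)
```

The two extract tasks land in the same wave and run concurrently, while `join` and `report` each wait for the wave before them.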

Logging and Monitoring: Airflow logs the progress and outcomes of each task. This allows you to monitor the workflow’s execution and identify any issues.
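The kind of record kept for each task can be sketched with Python’s `logging` module. The `run_and_record` helper and the record fields are assumptions made for illustration. Airflow keeps its own task logs and state, which this example does not reproduce.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("workflow")


def run_and_record(name, func):
    """Run one task and return a record of its state and duration."""
    started = time.perf_counter()
    try:
        func()
        state = "success"
    except Exception as exc:
        state = "failed"
        log.error("task %s failed: %s", name, exc)
    duration = time.perf_counter() - started
    log.info("task %s finished with state=%s in %.3fs", name, state, duration)
    return {"task": name, "state": state, "duration": duration}


def broken():
    raise ValueError("bad input")


records = [
    run_and_record("extract", lambda: None),
    run_and_record("load", broken),
]

# A monitoring view or an alert can be built from records like these.
failed = [record["task"] for record in records if record["state"] == "failed"]
print("failed tasks:", failed)
```

Collecting the state and duration of every task in one place is what makes it possible to spot failures and slow steps without reading each script’s output by hand.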

Web Interface: Airflow comes with a web-based user interface where you can visualize your DAGs, monitor task statuses, and manually trigger tasks if needed.

Benefits of Using Airflow

Automation: Airflow automates repetitive tasks, reducing the need for manual intervention.

Flexibility: It supports a wide range of tasks and can be integrated with various technologies.

Scalability: Airflow can grow from a single machine to many workers, making it suitable for both small projects and large enterprises.

Monitoring and Alerting: The built-in monitoring tools help you keep track of your workflows and detect issues early.

Conclusion

Apache Airflow simplifies the management of complex workflows by providing an intuitive way to define, schedule, and monitor tasks. Its use of Directed Acyclic Graphs and task dependencies ensures that tasks run in the right order, helping automate data processing, ETL jobs, and more. As you explore Airflow further, you’ll uncover its potential to streamline your work and make your workflows more efficient.